Back to blog

Back to Case Studies

Agentic Workflows

.avif)

Building Agentic Workflows for Analytics

The promise of AI-powered analytics has remained largely unfulfilled. Dashboards are static, data teams are overloaded, and ‘self-service BI’ is more myth than reality. But that’s changing. This blog explores what large language models can do, the opportunities they create, and how to unlock their potential for self-service analytics and insight generation from structured datasets.

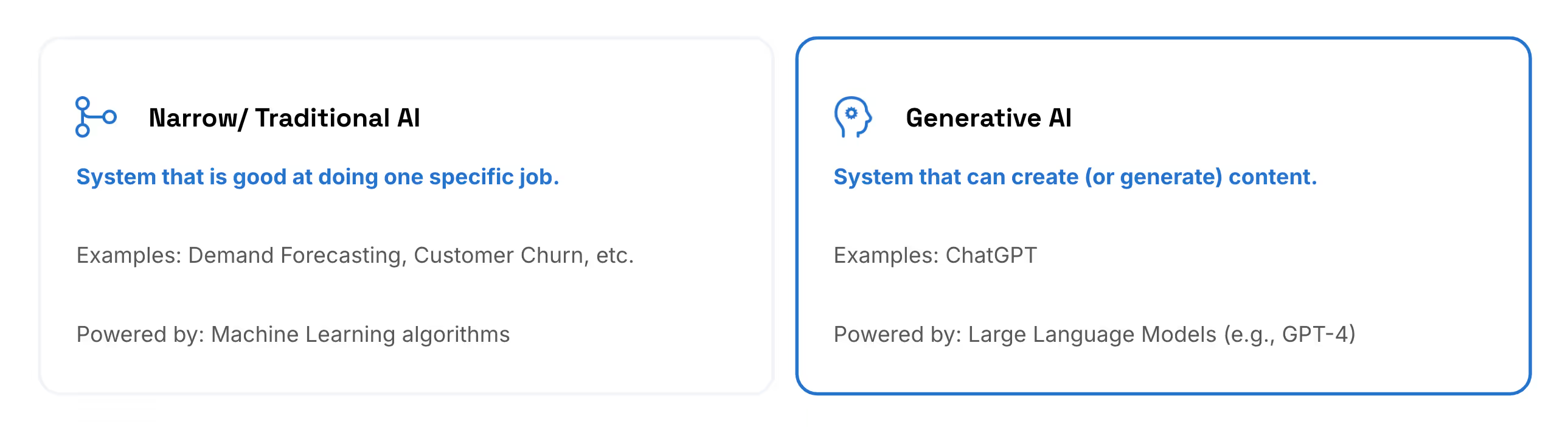

Setting the Stage: Narrow AI vs. Generative AI

Artificial intelligence (AI) has rapidly evolved over the last decade, with two primary categories shaping the enterprise landscape:

- Narrow (Traditional) AI refers to systems designed for specific tasks, such as demand forecasting, fraud detection, or recommendation engines. These models excel in well-defined environments but lack adaptability.

- Generative AI, powered by large language models (LLMs) like GPT-4, Claude, and others, introduces a new paradigm—one that can generate content, write code, analyze text, and even reason through problems. Unlike Narrow AI, Generative AI can operate across various domains, creating more dynamic and flexible applications.

This evolution has sparked interest in how LLMs can be applied beyond text generation—particularly in enterprise analytics, where businesses are searching for ways to make data more accessible. The idea is simple: instead of relying on SQL queries or static dashboards, what if users could simply ask questions in natural language and get meaningful, real-time insights?

But before diving into the challenges and opportunities of applying LLMs to analytics, it's important to understand their core capabilities.

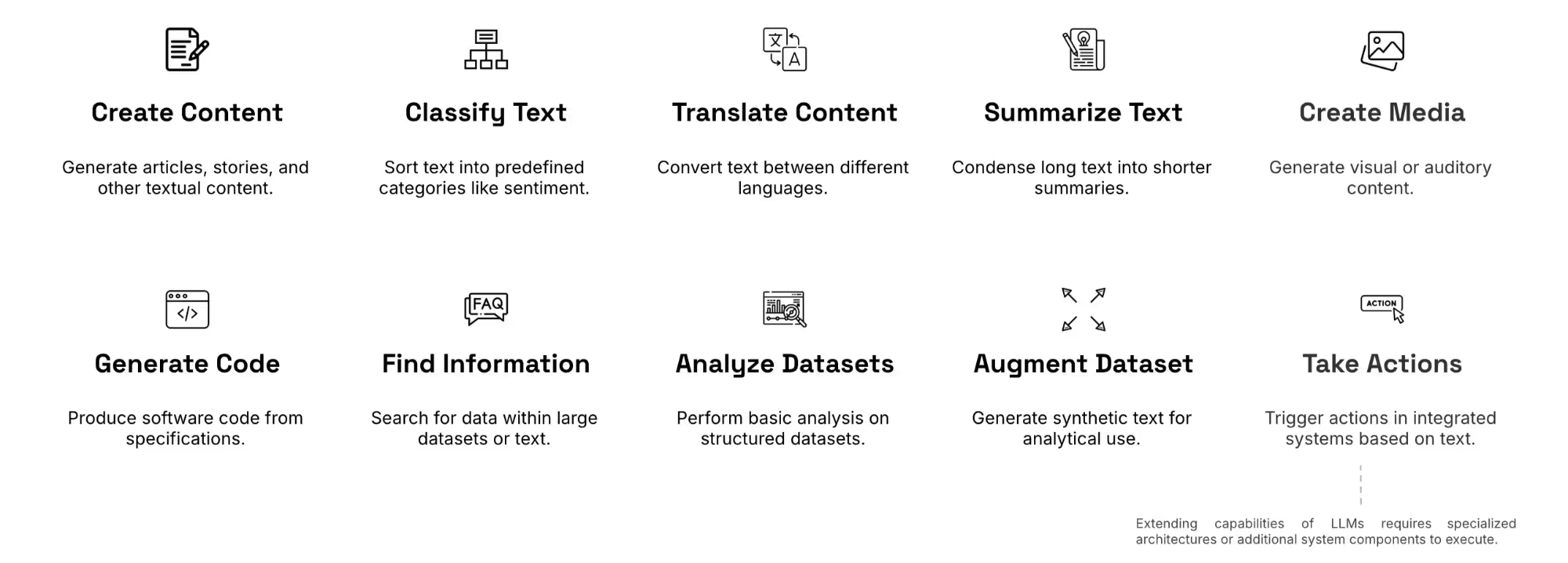

What Can Large Language Models (LLMs) Actually Do?

LLMs have demonstrated proficiency across a set of capabilities, including:

- Create Content – Generate articles, stories, and other textual content.

- Classify Text – Sort text into predefined categories like sentiment.

- Translate Content – Convert text between different languages.

- Summarize Text – Condense long text into shorter summaries.

- Create Media – Generate visual or auditory content.

- Generate Code – Produce software code from specifications.

- Find Information – Search for data within large datasets or text.

- Analyze Datasets – Perform basic analysis on structured datasets.

- Augment Dataset – Generate synthetic text for analytical use.

- Take Actions – Trigger actions in integrated systems.

Use Case: The Opportunity to Use LLMs for Data & Analytics

Organizations that capitalize on any one of these capabilities to streamline existing workflows can unlock significant efficiency gains. A logical area emerges in data and analytics where businesses are still spending excessive time manually coding in SQL and/or Python—making it a prime candidate for disruption.

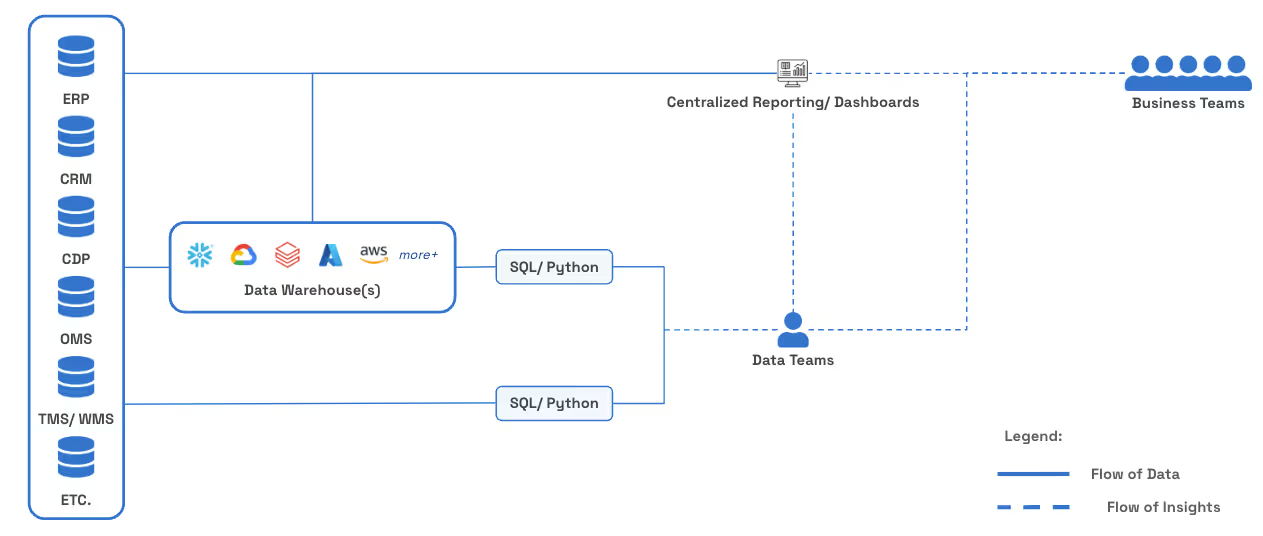

Business intelligence, as we know it today, is fundamentally broken. The term is often synonymous with dashboards, but more dashboards don’t necessarily lead to more insights. In fact, an overabundance of dashboards often creates confusion and erodes trust in the data. The real issue isn’t a lack of centralized dashboards, but rather a fundamental flaw in our perceptions of their intended use - they are not a catch-all solution for data-driven decision-making.

That said, centralized dashboards are a great starting point. They offer a broad, aggregated view of performance, making it easier for non-technical users to monitor key metrics. They lower the barrier to data access and provide an essential foundation for understanding trends at a high level. However, the insights that truly drive decisions—the ones that uncover inefficiencies, optimize operations, and create competitive advantages—still require manual deep dives into spreadsheets or writing SQL/Python queries. This task is typically left to already overwhelmed data teams, who are constantly fielding ad-hoc requests from business teams desperate for custom insights. This bottleneck is more than just an operational inefficiency; it represents a massive opportunity cost—where valuable insights remain buried in raw data, waiting to be extracted and acted upon.

Dashboards were never meant to replace deep analysis; they were meant to serve as a launchpad for exploration. Yet, many organizations attempt to enable “self-service” analytics by simply creating more dashboards, leading to what we call dashboard anarchy—a graveyard of outdated, underutilized, and often contradictory dashboards that ultimately erode trust in the data. Companies that fall into this trap risk losing their ability to harness data effectively, making decision-making increasingly difficult in a world where data-driven agility is non-negotiable.

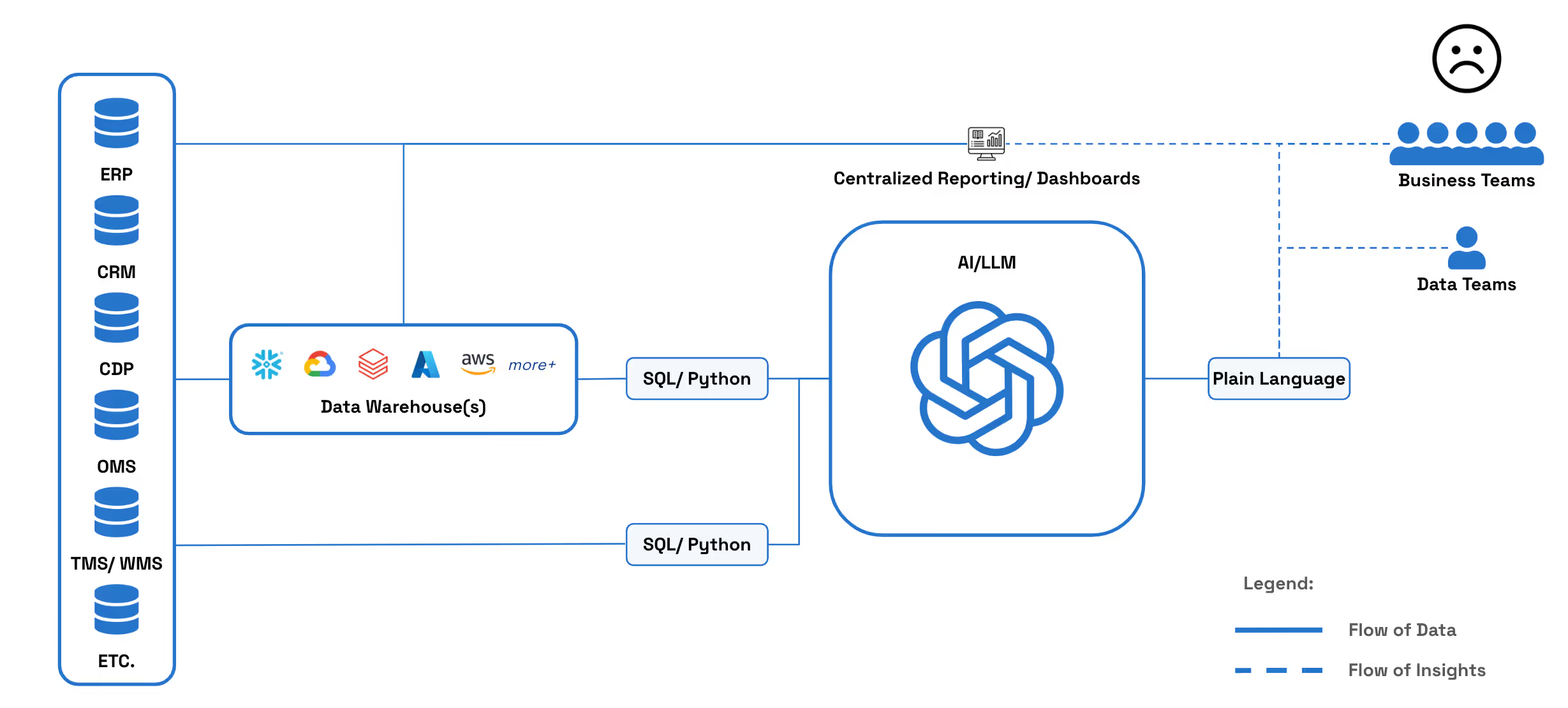

This is where LLMs change the game. Their promise in analytics is simple but transformative: allow users to query data in plain language and extract custom insights—without needing SQL or Python expertise.

The real question is no longer whether LLMs can help but how organizations can deploy them effectively. Without the right architecture, LLMs risk generating inconsistent, misleading, or outright incorrect insights due to missing business context and technical limitations. To achieve real impact, businesses must integrate LLMs into structured workflows that ensure accuracy, context-awareness, and seamless execution.

Organizations today are exploring whether LLMs can bridge this gap—but real-world implementation presents significant challenges.

The Current State of Data & Analytics at Mid-to-Large Organizations

Most companies today operate within rigid, outdated data workflows that limit accessibility and slow decision-making. Despite major investments in business intelligence tools, organizations still struggle to extract timely, actionable insights:

- Manual spreadsheet analysis is still widespread, introducing inefficiencies, version control issues, and human error.

- Static dashboards are built for specific use cases, offering limited flexibility for ad hoc exploration.

- Dashboards provide historical snapshots but fail to offer real-time, interactive analytics for decision-making at the moment of need.

- Dashboards aggregate data at a high level due to performance constraints, masking anomalies and exceptions while limiting drill-down capabilities.

- Business users remain dependent on data teams for even basic queries, leading to bottlenecks and delays.

- Highly skilled analysts and engineers spend too much time manually pulling, cleaning, and transforming data instead of focusing on higher-value work.

- Critical insights remain buried within complex ERP and supply chain systems, requiring extensive SQL work just to retrieve relevant data.

- The average time to actionable insights? A staggering 7+ days—far too slow for today’s fast-moving businesses.

This inefficiency is more than just a workflow problem—it’s a competitive disadvantage. Organizations that fail to evolve beyond traditional dashboards and manual data processes risk falling behind as the demand for real-time, AI-driven analytics accelerates.

Supplementing LLMs with Basic Context is not the Answer

Unfortunately, you can’t simply connect LLMs to structured databases and expect magic—LLMs need context about about data models, relationships, and business logic to generate meaningful insights.

Most organizations attempting to integrate LLMs into their data systems rely on text-to-SQL models that convert natural language prompts into queries using only basic metadata (e.g., table and field names) retrieved via a simple top-K retrieval-augmented generation (RAG) mechanism. While this method may improve over a purely generative approach, it has serious limitations when dealing with structured enterprise data.

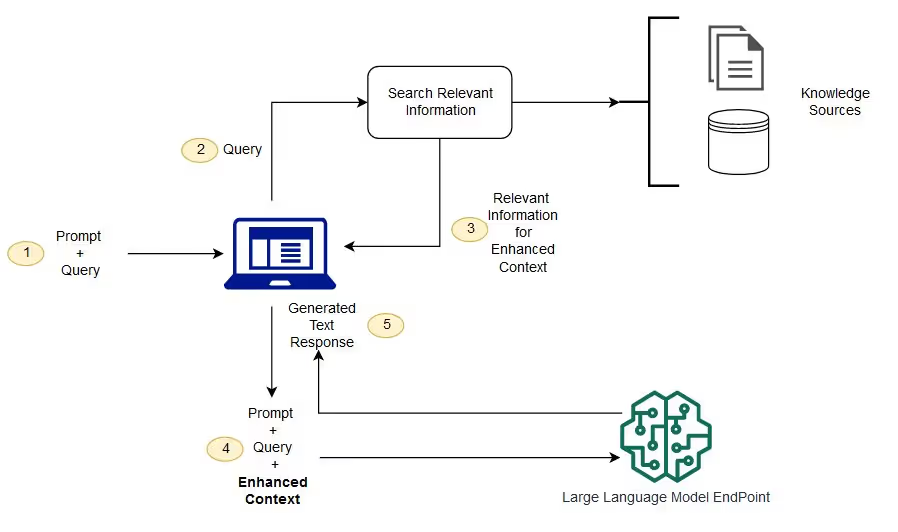

What is RAG?

Retrieval-Augmented Generation (RAG) is a technique that enhances large language models (LLMs) by allowing them to pull in authoritative, external knowledge before generating responses. While LLMs are trained on vast datasets, they don’t have real-time access to proprietary or domain-specific knowledge. RAG helps bridge this gap by retrieving relevant context from an external knowledge base before the model generates an answer—improving accuracy, relevance, and domain specificity without requiring model retraining.

How Does RAG Work?

The most common approach (recommended by Open AI) involves cosine similarity to match user queries with the most relevant pieces of stored knowledge. Here’s a simplified version of the process:

- The user’s query is converted into a vector representation.

- Contextual data is also vectorized.

- The system retrieves the top K most relevant pieces of context based on similarity scores.

- The retrieved context is then passed to the LLM before generating a response.

While effective for retrieving unstructured information (e.g., documents, FAQs), this approach struggles with structured datasets where context is inherently more complex.

Why Basic RAG + Text-to-SQL Fails in Enterprise Analytics

Most RAG implementations retrieve only basic metadata (e.g., table and field names), which leads to incomplete or incorrect SQL generation due to:

- Vague Table Names – Ambiguous names provide no clear meaning.

- Vague Field Descriptions – Poorly labeled fields lead to misinterpretation.

- Unclear Table Relationships – Missing relationships make joins unreliable.

- Lack of Business Context – Metrics often have nuanced definitions.

- Tribal Knowledge – Critical insights exist only in employees' heads.

And even if LLMs could perfectly generate SQL, a competent human analyst does far more:

- Ask clarifying questions – Most user queries are vague.

- Debug errors – Identify and fix issues like divide-by-zero errors.

- Summarize insights – Distill complex results into key takeaways.

- Explain assumptions – Make implicit logic transparent.

- Provide step-by-step reasoning – Show how insights were derived.

- Generate visualizations – Turn raw data into compelling visuals.

- Suggest actions – Provide recommendations, not just numbers.

And finally, while effective for retrieving information from unstructured data, this method has drawbacks when dealing with structured datasets:

- Overlapping scores – Many pieces of context may have similar relevance scores, making it hard to isolate the most useful one.

- Arbitrary selection of K – Setting K too low risks missing critical context, while setting it too high can overload the LLM with excessive information, increasing the risk of hallucinations or irrelevant answers.

This is why naive “talk to your data” approaches fail—and why even major players like Snowflake Copilot and Microsoft’s AI-driven BI tools have struggled with adoption. Simply generating SQL queries isn’t enough. Learn more about the landscape of AI tools for data analysis here.

Example of Limitations stated in Enterprise Platform Documentation

.avif)

CoPilot directly indicates that the current platform cannot understand complex intent. This is the problem of "Context-sensitive ambiguity" where the meaning varies depending on context (this context is often undocumented or exists as tribal knowledge). A basic illustration can be seen in the question, "How many sales this month?" this could refer to revenue, profit, or units sold, depending on the situation. Without clear context, the meaning remains contextually indeterminate, leading to potential misinterpretations or incomplete insights.

.avif)

Snowflake Copilot acknowledges its current limitations in understanding complex data models. It may generate SQL queries that contain invalid syntax (non-executable) or reference non-existent tables and columns.

Unlocking the full potential of LLMs in analytics requires a far more structured, agentic approach—one that moves beyond simple text-to-SQL conversions.

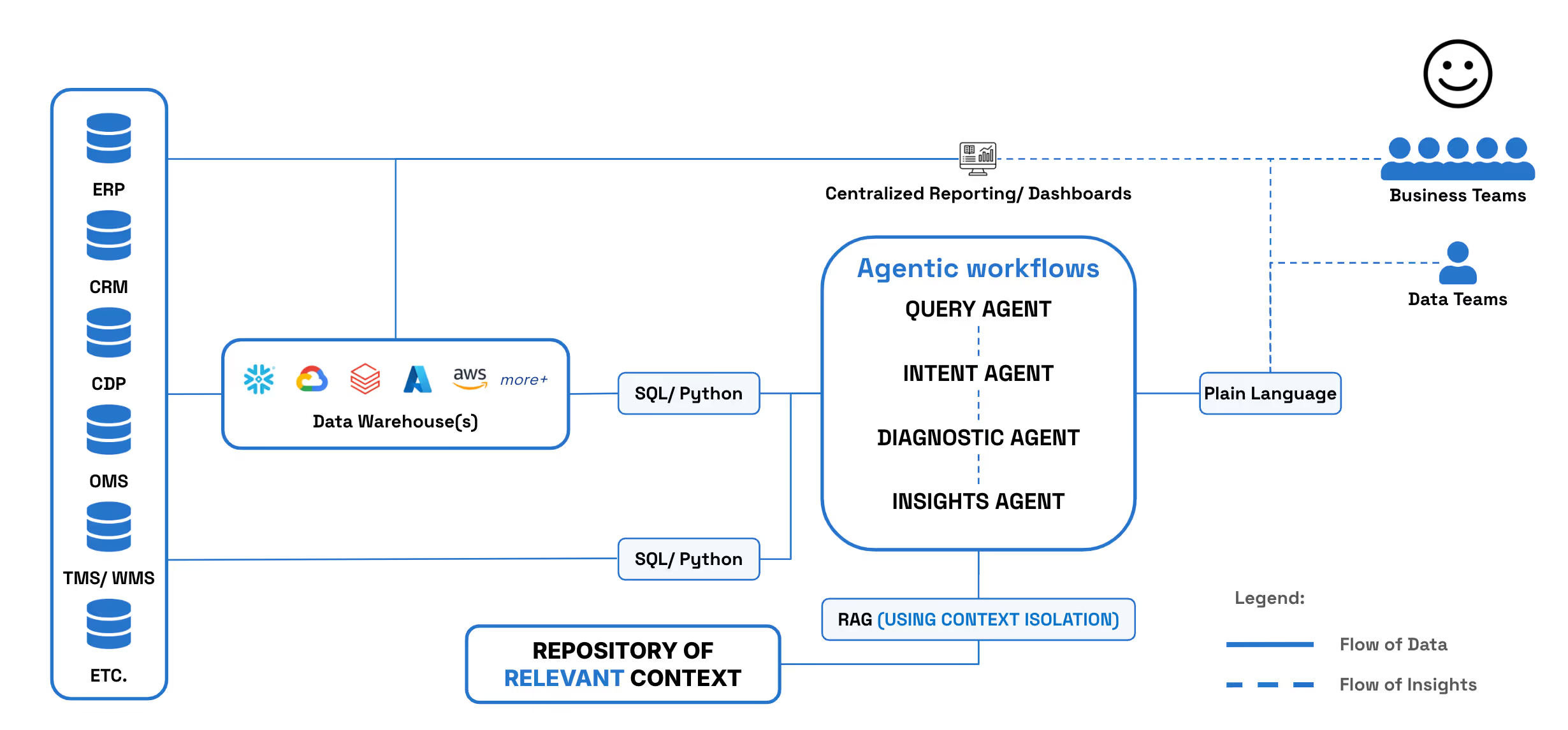

The Path Forward: Agentic Workflows + Intelligent Context Retrieval = Best in Class Performance for Analytics

The key to solving these challenges lies in agentic workflows—the orchestration of multiple specialized AI agents, each responsible for a distinct function. These agents work collaboratively, communicating recursively to refine outputs, much like a competent human data analyst would.

A structured multi-agent system ensures that every step of the analytical workflow is handled efficiently:

- Intent Agent – Clarifies what the user actually wants based on historical patterns.

- Context Agent - Retrieves relevant context needed to generate a SQL/ Python query.

- Query Agent – Generates SQL/ Python needed to answer users questions.

- Diagnostic Agent – Diagnoses errors in generated queries and suggests corrections.

- Evaluation Agent - Evaluates the output generated for validity and relevance.

- Insights Agent – Summarizes key takeaways and recommends next steps.

- Action Agent – Allows users to take action on recommendations.

However, the effectiveness of the agents depends entirely on the quality and depth of context they can access. A well-designed agentic workflow must be coupled with a smarter context retrieval mechanism—one that goes beyond static metadata and actively curates a rich knowledge repository. This includes:

- Semantic layers – Capturing relationships between tables, KPIs, and business logic to maintain contextual integrity.

- Industry-specific terminology – Mapping domain-specific language to ensure precise query interpretation.

- Custom business logic – Embedding proprietary formulas, operational constraints, and strategic definitions.

Rather than relying on top-K retrieval, agentic workflows introduce context isolation, where a dedicated Context Agent actively reviews and refines retrieved information before passing it to the LLM. This ensures the model receives exactly the right amount of context, preventing both undersupply (hallucinations) and oversupply (forced-fitting).

By combining agentic workflows with intelligent context retrieval, organizations move beyond static dashboards and manual SQL queries, unlocking a true self-service analytics experience that delivers precise, contextual, and actionable insights on command.

This approach doesn’t just improve efficiency—it fundamentally redefines how organizations interact with data. Instead of being overwhelmed with ad-hoc requests, data teams become orchestrators of intelligent systems, managing and refining workflows that autonomously generate insights at scale.

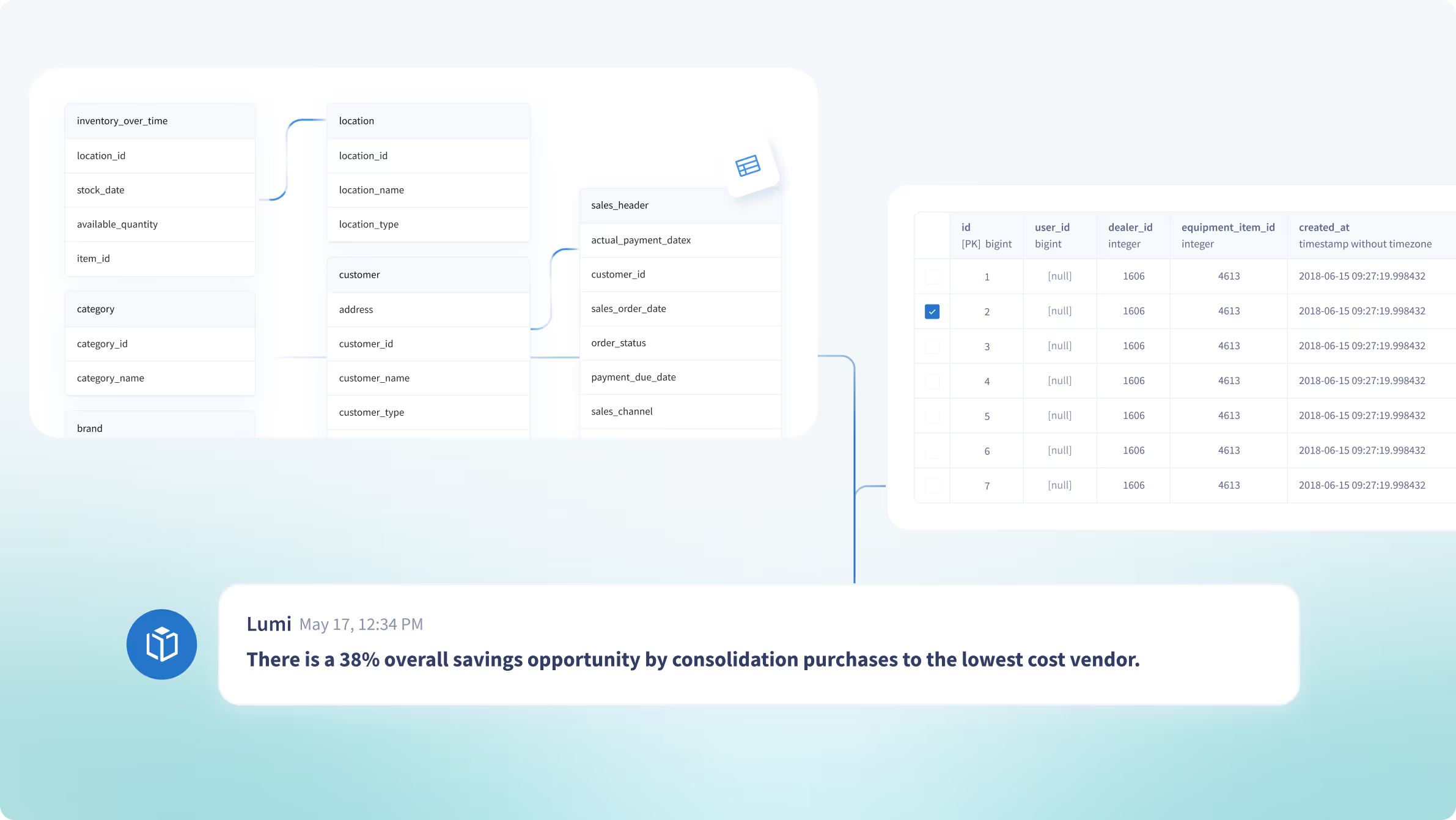

Introducing Lumi AI: The Future of Data & Analytics

For almost 2 years, Lumi AI has been pioneering agentic workflows for analytics—long before it became an industry buzzword. Designed to mimic the capabilities of a competent human analyst while handling enterprise-scale complexity, Lumi is an AI-powered analytics platform that enables teams to explore structured data, generate reports, and extract custom insights through a simple chat interface—no SQL or Python needed. By unlocking hidden value, boosting productivity, and freeing up data teams for more strategic work, Lumi redefines how organizations interact with their data.

We’ve worked closely with enterprise customers to uncover what’s truly needed to make AI-driven analytics work in the real world. We’ve tackled countless edge cases, explored every rabbit hole, and built a system that delivers best-in-class performance—quantifiably proven in head-to-head experiments against Snowflake and ThoughtSpot, using the same datasets and evaluation questions:

Building Lumi AI: Key Takeaways & Lessons Learned

We’re proud of what we’ve built—not just as pioneers in this space, but as thought leaders driving real-world adoption and setting a new standard for AI-powered analytics. Our journey has just begun, and we're continuously pushing the boundaries of what’s possible.

We understand that:

- Humans are vague ← Systems that guide users toward effective prompting will win.

- Faster context definition = faster time to value ← Streamlining this process is key.

- Strong semantic layers drive better outcomes ← Well-defined structures outperform guesswork.

- Balancing context is an art ← Too little increases the risk of hallucinations, too much leads to forced-fitting.

- Multi-agent architectures outperform single LLM setups ← Specialized agents create more reliable workflows.

- AI will only improve ← This is the worst it will ever be—smarter, faster, and cheaper is inevitable.

- Data security is paramount – IT teams need control, transparency, and peace of mind.

Organizations that embrace agentic workflows for analytics will unlock faster insights, better decision-making, and a competitive edge in a data-driven world.

To learn more, reach out for a demo. 🚀

Make Better, Faster Decisions.

.avif)

.avif)